High availability for mission-critical software

Why is high availability something users of any mission-critical software need to think about? The simple answer is that if the application is unavailable, its users are in a world of hurt. Software Consulting Services, LLC (SCS) takes high availability very seriously. Pay special attention if you are using VMware.

One typical approach for data processing applications is to provide recovery from failures by taking snapshots. Snapshots come in two varieties. Full snapshots copy the entire current state, i.e. all data managed by the application. Delta snapshots copy only the changes made since the previous snapshot.
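To make the distinction concrete, here is a minimal Python sketch. It assumes the application's state can be modeled as an in-memory dictionary from record keys to values, and that a delta marks a deleted record with `None`; the function names `full_snapshot` and `delta_snapshot` are hypothetical, not part of any SCS or VMware interface.

```python
from typing import Dict, Optional

# Assumption: application state is a simple key -> value mapping.
State = Dict[str, str]
# A delta maps each changed key to its new value; None marks a deletion.
Delta = Dict[str, Optional[str]]


def full_snapshot(state: State) -> State:
    """Full snapshot: copy the entire current state."""
    return dict(state)


def delta_snapshot(previous: State, current: State) -> Delta:
    """Delta snapshot: record only what changed since the previous snapshot."""
    delta: Delta = {}
    for key, value in current.items():
        if previous.get(key) != value:  # new or modified record
            delta[key] = value
    for key in previous:
        if key not in current:  # deleted record
            delta[key] = None
    return delta


monday = {"acct-1": "100", "acct-2": "250"}
tuesday = {"acct-1": "100", "acct-2": "300", "acct-3": "75"}
print(delta_snapshot(monday, tuesday))  # {'acct-2': '300', 'acct-3': '75'}
```

A real data manager would snapshot pages or files rather than a dictionary, but the difference in what gets copied is the same.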

With the full snapshot approach, recovery takes you back to the state at the time of the last snapshot. If snapshots are taken nightly, a failure loses all work done since the previous night's backup. For applications that almost never fail, this method usually provides adequate reliability.

Delta snapshots are different. As with full snapshots, you can get back to the state of the latest snapshot. Unfortunately, getting there requires starting from a full backup copy and then applying the deltas one at a time, in order, until the state of the computation is as up to date as it can be.
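Recovery from delta snapshots can be sketched the same way. Again this assumes a dictionary-based state, deltas that use `None` to mark deletions, and a hypothetical `restore` function; it illustrates the replay idea, not any particular product's restore procedure.

```python
from typing import Dict, List, Optional

State = Dict[str, str]
Delta = Dict[str, Optional[str]]  # assumption: None marks a deleted key


def restore(full_backup: State, deltas: List[Delta]) -> State:
    """Rebuild the latest state: start from the full backup, then apply
    each delta one at a time, oldest first."""
    state = dict(full_backup)  # work on a copy; never modify the backup
    for delta in deltas:
        for key, value in delta.items():
            if value is None:
                state.pop(key, None)  # record was deleted
            else:
                state[key] = value    # record was added or changed
    return state


base = {"acct-1": "100", "acct-2": "250"}
deltas = [
    {"acct-2": "300", "acct-3": "75"},  # Tuesday's changes
    {"acct-1": None},                   # Wednesday: acct-1 closed
]
print(restore(base, deltas))  # {'acct-2': '300', 'acct-3': '75'}
```

Every delta in the chain has to be present and applied in the right order; if one is missing or damaged, none of the later ones can be trusted.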

Delta snapshots are tricky. As the VMware "Best practices" documentation (1025279) states, "Snapshots are not backups. A snapshot file is only a change log of the original virtual disk. Therefore, do not rely on it as a direct backup process."

For snapshots to work, databases need to be quiescent when a snapshot is taken. Usually, this means the service provided by the application is interrupted. Choices must therefore be made that trade mean time to recover (MTTR), and how much recent work can be lost, against the cost of service interruption. That is why you hear the term "nightly backup" rather than "15-minutes-ago backup."

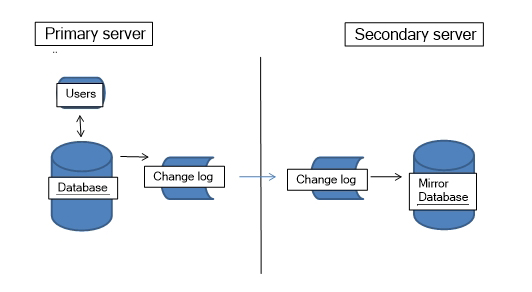

To meet the need for even higher availability with larger active computational states, our applications keep two (or more) application environments in sync in near real time. We call this “mirroring.” If an application server fails, you can switch from one environment to the other and be assured that you will lose little, if anything. Mirroring is done by log shipping.

Log shipping is a reliable mechanism for building recoverable databases, and it is built into SCS's data management subsystem. Here is how it works. Two separate application environments are kept in sync. When a record is updated in the primary environment, a transaction is created and passed to the secondary environment, which applies it. The secondary is always running, so it is active and effectively "on standby." It can take over the work with a simple "make primary" command. Contrast this active standby with a "powered off" backup machine: the two are in no way the same in terms of disaster recovery.
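The flow just described can be sketched in a few lines of Python. This is an illustrative, in-process model under simplifying assumptions, not SCS's implementation: shipping is a direct method call rather than a network transfer, each environment holds its data in a dictionary, and `Mirror`, `update`, and `make_primary` are hypothetical names (the last echoing the "make primary" command).

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class LogRecord:
    seq: int    # position in the shipped log (hypothetical field)
    key: str
    value: str


@dataclass
class Environment:
    name: str
    data: Dict[str, str] = field(default_factory=dict)
    is_primary: bool = False


class Mirror:
    """Keeps a secondary environment in sync with a primary by log shipping."""

    def __init__(self, primary: Environment, secondary: Environment) -> None:
        primary.is_primary, secondary.is_primary = True, False
        self.primary = primary
        self.secondary = secondary
        self.next_seq = 1

    def update(self, key: str, value: str) -> None:
        # 1. Apply the update in the primary environment.
        self.primary.data[key] = value
        # 2. Turn it into a log record and ship it to the secondary.
        record = LogRecord(self.next_seq, key, value)
        self.next_seq += 1
        self.ship(record)

    def ship(self, record: LogRecord) -> None:
        # Assumption: delivery is immediate; a real system sends this over the network.
        self.secondary.data[record.key] = record.value

    def make_primary(self) -> None:
        """Promote the standby: the two environments swap roles."""
        self.primary, self.secondary = self.secondary, self.primary
        self.primary.is_primary, self.secondary.is_primary = True, False


a = Environment("server-a")
b = Environment("server-b")
mirror = Mirror(a, b)
mirror.update("order-17", "shipped")
print(b.data)               # {'order-17': 'shipped'}
mirror.make_primary()       # server-a fails; promote server-b
print(mirror.primary.name)  # server-b
```

Because the secondary applies every update as it arrives, promotion is only a role swap with no restore step, which is the practical difference from a powered-off backup machine.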

It is important that the primary and secondary environments have nothing in common but their network connection. A failure in one should never affect the other. This is called "shared nothing servers." We don't even put these servers on the same UPS! Nothing is shared, not even the underlying data management algorithms: the transmitted log-based transactions have their own updating, consistency, and validation logic. This design is meant to be both more bulletproof and more idiot-proof.
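One way a secondary can keep its own validation logic, independent of the primary's code, is to check every shipped record before applying it. The sketch below assumes, hypothetically, that each record carries a sequence number and a SHA-256 digest computed by the sender; SCS's actual consistency checks are not described here, so treat `StandbyApplier` and `checksum` as an illustration of the idea only.

```python
import hashlib
import json
from typing import Dict


def checksum(seq: int, key: str, value: str) -> str:
    """Digest the sender attaches to each record (hypothetical scheme)."""
    payload = json.dumps([seq, key, value]).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


class StandbyApplier:
    """Secondary-side applier that validates records on its own,
    without trusting the primary's code path."""

    def __init__(self) -> None:
        self.data: Dict[str, str] = {}
        self.last_seq = 0

    def apply(self, seq: int, key: str, value: str, digest: str) -> None:
        # Reject gaps and replays: records must arrive strictly in order.
        if seq != self.last_seq + 1:
            raise ValueError(f"expected record {self.last_seq + 1}, got {seq}")
        # Reject records damaged in transit.
        if checksum(seq, key, value) != digest:
            raise ValueError(f"checksum mismatch on record {seq}")
        self.data[key] = value
        self.last_seq = seq


standby = StandbyApplier()
standby.apply(1, "acct-2", "300", checksum(1, "acct-2", "300"))
try:
    standby.apply(3, "acct-3", "75", checksum(3, "acct-3", "75"))
except ValueError as err:
    print(err)  # expected record 2, got 3
```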

As a side note: with stable software systems, the actual need to switch to a secondary server may be so rare that we recommend switching on a scheduled basis. Without practice, an emergency switchover is likely to create additional problems. (You should read the VMware recovery procedure if you want a really good scare.) I really wish more attention were paid to recovery and high availability issues. Just last month, while helping one of our customers, we found that their secondary server had last been updated in 2008. An untested and unpracticed disaster recovery plan is no recovery plan at all.
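Scheduled switchovers are the real test, but a simple automated check can at least catch a secondary that has quietly stopped receiving updates. This sketch assumes the secondary records when it last applied a shipped transaction, and that the primary sends periodic heartbeat records during quiet periods so a long gap always signals trouble; the function name `standby_is_stale` and the five-minute threshold are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone


def standby_is_stale(last_applied: datetime, now: datetime,
                     max_lag: timedelta = timedelta(minutes=5)) -> bool:
    """True if the secondary has applied nothing for longer than max_lag.

    Assumes the primary ships heartbeat records when idle, so silence
    means log shipping has stopped rather than that nothing happened.
    """
    return now - last_applied > max_lag


now = datetime(2015, 8, 3, 12, 0, tzinfo=timezone.utc)
print(standby_is_stale(now - timedelta(seconds=30), now))                # False
print(standby_is_stale(datetime(2008, 1, 1, tzinfo=timezone.utc), now))  # True
```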

The primary and secondary servers don't need to be in the same location, although, since server-class technology is so inexpensive these days, both are likely to be deployed in the same server room. (They might be separate VMs on separate physical servers.) If enhanced disaster recovery is desired, additional secondary servers can be deployed in remote, secure locations.

All the articles and their links starting from this URL are relevant if you wish to dig deeper.

Published: 3-Aug-2015